Editor Improvements

Over the past couple of months I've been working on some major improvements to JanusWeb's built-in editor. The engine has had an editor in some form since the very beginning: even before JanusWeb existed, Elation Engine had all the trappings of an editor. You could create new scenes, inspect and add objects to the current scene, and view or change every property of each object. However, some of that functionality never made it into the JanusWeb UI, and the editor has been somewhat neglected in favor of people editing markup by hand and managing assets externally, so I decided it was time to start improving the situation.

For the longest time I tried to avoid looking at what engines like Unity and Unreal were doing, because I wanted my implementation to be unique, something that took advantage of the web, rather than just cloning what they were doing. However, as it turns out, this means that what I've built feels unfamiliar to people - they don't know how to use it. So it's probably time to look at all the pieces we have, look at what those established tools have, and figure out what pieces are missing and how they could be arranged better to expose all the functionality in a way that feels familiar to experienced developers, without being too intimidating to new users.

Ingredients

In no particular order, here are the parts that most editors seem to share:

- Project Management (New Scene, Load Scene, Save, Publish, Export, etc.)

- Asset Management

- Marketplace

- Code Editor

- Scene tree

- World settings

- Object inspector

- 3D views

- Animation sequencer

- Material editor

- Shader editor

- VFX editor

- Scripting

We've got most of these tools built in some form already, but they haven't really been pulled together into a cohesive UI yet. So let's explore that.

Project Management

As currently implemented, the JanusWeb editor only really concerns itself with the currently loaded room. We need to extend this so that the editor can be used for entire projects: collections of rooms and assets that can all be used together. We need to be able to create new projects, save the current project, list other existing projects, and switch between projects. It would also be nice to support exporting projects, giving you a self-contained zip or other archive of all the rooms and assets involved. We could also support publishing these bundled projects, either to web hosts, to IPFS, or as minted NFTs (I know, I know!)

Asset Management

This is one of the areas where the web has a nice advantage over native apps, but also where it's at a bit of a disadvantage. On the web, pulling in assets from many different sources using only a URL is normal: you can host your assets on a CDN, or use a service with an API like Sketchfab or Google Poly (R.I.P.) which hosts the models for you. However, this can also get sloppy. If you're hot-linking assets from a site that goes offline or changes its URL structure, you've now got the link rot the web is famous for, and the assets in your virtual world suddenly go missing.

JanusWeb has the concept of asset packs: a JSON file that defines a reusable set of assets you can pull into multiple projects. Currently, though, creating these asset packs is a manual process, and it's up to you to generate the index file and host it, along with the asset files, somewhere accessible to your project. You can drag and drop assets from your hard drive into the scene, but they disappear once you refresh, since they aren't persisted anywhere. Worst of all, there's currently no way to save your edits back to your web host. All edits you make in the scene disappear at the end of the session, so if you want to keep them you have to manually copy the markup and assets somewhere permanent. Not good!

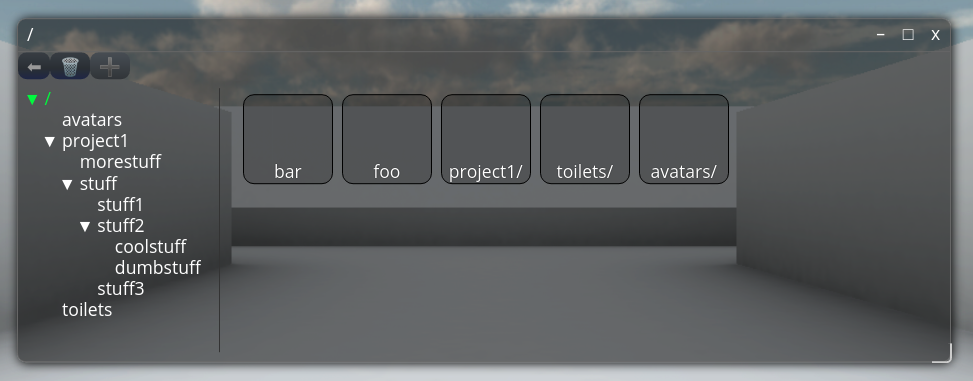
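Since building an asset pack index is currently a manual step, here is a minimal sketch of generating one from a list of files. The JSON shape (`name`, `baseurl`, `assets`, `assettype`, `src`) and the extension-to-type mapping are assumptions made for illustration; they are not JanusWeb's actual asset pack format.

```typescript
// Hypothetical asset pack schema: these field names are illustrative
// assumptions, not the actual JanusWeb asset pack format.
type AssetType = "model" | "image" | "sound" | "video";

interface AssetEntry {
  assettype: AssetType;
  name: string; // id used to reference the asset from a room
  src: string;  // path relative to the pack's baseurl
}

interface AssetPack {
  name: string;
  baseurl: string;
  assets: AssetEntry[];
}

// Assumed mapping from file extension to asset type.
const EXTENSION_TYPES = new Map<string, AssetType>([
  ["glb", "model"], ["gltf", "model"], ["fbx", "model"], ["obj", "model"],
  ["png", "image"], ["jpg", "image"], ["jpeg", "image"], ["webp", "image"],
  ["mp3", "sound"], ["ogg", "sound"], ["wav", "sound"],
  ["mp4", "video"], ["webm", "video"],
]);

// Build an asset pack index from a list of relative file paths.
// Files with unrecognized extensions (or no extension) are skipped.
function buildAssetPack(name: string, baseurl: string, files: string[]): AssetPack {
  const assets: AssetEntry[] = [];
  for (const file of files) {
    const base = file.split("/").pop() || file;
    const dot = base.lastIndexOf(".");
    if (dot <= 0) continue;
    const assettype = EXTENSION_TYPES.get(base.slice(dot + 1).toLowerCase());
    if (!assettype) continue;
    // The file name without its extension becomes the asset id.
    assets.push({ assettype, name: base.slice(0, dot), src: file });
  }
  return { name, baseurl, assets };
}

const pack = buildAssetPack("scifi-props", "https://example.com/packs/scifi/", [
  "models/crate.glb",
  "textures/crate_diffuse.png",
  "README.txt",
]);
console.log(JSON.stringify(pack, null, 2));
```

The resulting JSON could be written next to the asset files and hosted alongside them, which is exactly the step that is manual today.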

Virtual Filesystems for Virtual Worlds

This is one of the biggest improvements I've made to JanusWeb's editor as part of this project. Using the amazing BrowserFS project (https://github.com/jvilk/BrowserFS/), we can present a virtual filesystem (VFS) with a NodeJS-style filesystem API, backed by a configurable set of content storage systems. We can now offer persistent storage across page refreshes using the IndexedDB backend, and we can extend it with support for additional backends: Dropbox, the HTML5 FileSystem Access API, and more.
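BrowserFS handles this layering with its MountableFileSystem backend. The sketch below does not use the BrowserFS API; it is a minimal, self-contained illustration of the same idea, assuming a hypothetical `Backend` interface: each mount point maps a path prefix to a storage backend, and the longest matching prefix decides where a read or write goes.

```typescript
// Hypothetical, simplified storage backend interface (not BrowserFS's API).
interface Backend {
  read(path: string): string | undefined;
  write(path: string, data: string): void;
}

// In-memory backend standing in for IndexedDB, Dropbox, etc.
class MemoryBackend implements Backend {
  private files = new Map<string, string>();
  read(path: string): string | undefined { return this.files.get(path); }
  write(path: string, data: string): void { this.files.set(path, data); }
}

class MountableVFS {
  private mounts: [string, Backend][] = [];

  mount(prefix: string, backend: Backend): void {
    this.mounts.push([prefix.replace(/\/+$/, ""), backend]);
    // Keep the longest prefix first so the most specific mount wins.
    this.mounts.sort((a, b) => b[0].length - a[0].length);
  }

  // Find the backend responsible for a path, plus the path relative to its mount.
  private resolve(path: string): [Backend, string] {
    for (const [prefix, backend] of this.mounts) {
      if (path === prefix || path.startsWith(prefix + "/")) {
        return [backend, path.slice(prefix.length) || "/"];
      }
    }
    throw new Error(`No filesystem mounted for ${path}`);
  }

  readFile(path: string): string {
    const [backend, rel] = this.resolve(path);
    const data = backend.read(rel);
    if (data === undefined) throw new Error(`ENOENT: ${path}`);
    return data;
  }

  writeFile(path: string, data: string): void {
    const [backend, rel] = this.resolve(path);
    backend.write(rel, data);
  }
}

const local = new MemoryBackend();  // would be IndexedDB-backed in a browser
const remote = new MemoryBackend(); // would be e.g. a Dropbox-backed store
const vfs = new MountableVFS();
vfs.mount("/", local);
vfs.mount("/dropbox", remote);

vfs.writeFile("/rooms/index.html", "<FireBoxRoom>...</FireBoxRoom>");
vfs.writeFile("/dropbox/models/ship.glb", "(binary data)");
console.log(vfs.readFile("/dropbox/models/ship.glb"));
```

Because callers only ever see paths, swapping the storage behind a mount point doesn't change any code that reads rooms or assets.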

We can host all types of content in this VFS space - rooms, models, images, and any other type of assets we might want to use in our rooms. The engine has been extended with support for janus-vfs:// URLs for assets and rooms, so we can serve complex rooms out of our VFS.
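One way to map `janus-vfs://` references onto VFS paths is to lean on the standard WHATWG `URL` parser, which also resolves relative references for non-special schemes. This sketch assumes a `janus-vfs:///path/in/vfs` layout with an empty host; the URL format JanusWeb actually uses may differ.

```typescript
// Assumed URL layout: janus-vfs:///<path inside the VFS> (empty host).
const VFS_PROTOCOL = "janus-vfs:";

// Resolve an asset reference against the URL of the room that uses it.
// Returns a decoded VFS path, or null when the reference points elsewhere
// (for example a plain https:// asset on a CDN).
function resolveVfsPath(ref: string, roomUrl: string): string | null {
  const url = new URL(ref, roomUrl);
  if (url.protocol !== VFS_PROTOCOL) return null;
  return decodeURIComponent(url.pathname);
}

const roomUrl = "janus-vfs:///rooms/index.html";
console.log(resolveVfsPath("../models/ship.glb", roomUrl));
console.log(resolveVfsPath("textures/hull plate.png", roomUrl));
console.log(resolveVfsPath("https://cdn.example.com/crate.glb", roomUrl));
```

In the browser, the file contents read from that path could then be handed to the existing loaders through an object URL (`URL.createObjectURL(new Blob([data]))`), so loaders that only understand ordinary URLs keep working.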

Marketplace

Currently there's no real marketplace for web projects, but several people in the community have been discussing what this might look like. I'd like to build on the asset pack concept we already support, and allow users to add additional asset packs to the editor. Services like Sketchfab are useful too. In either case though, we probably want to be able to pick assets from those asset packs and save them - first into our VFS, and later into permanent storage wherever we're hosting our project.

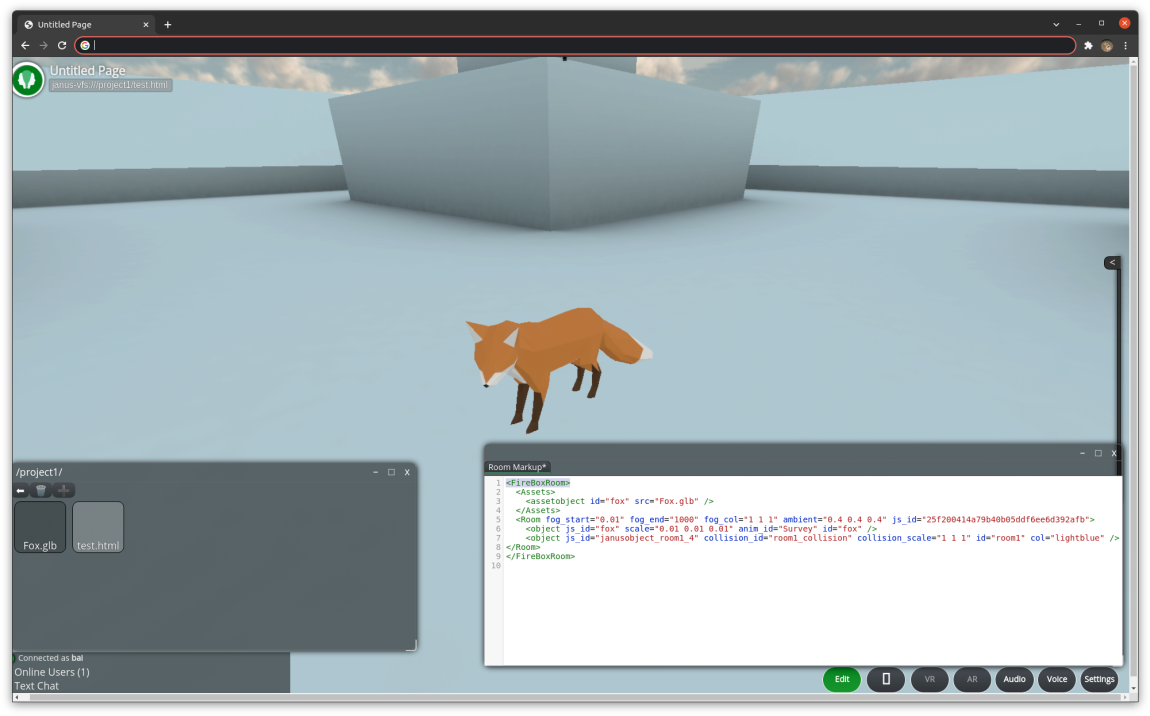

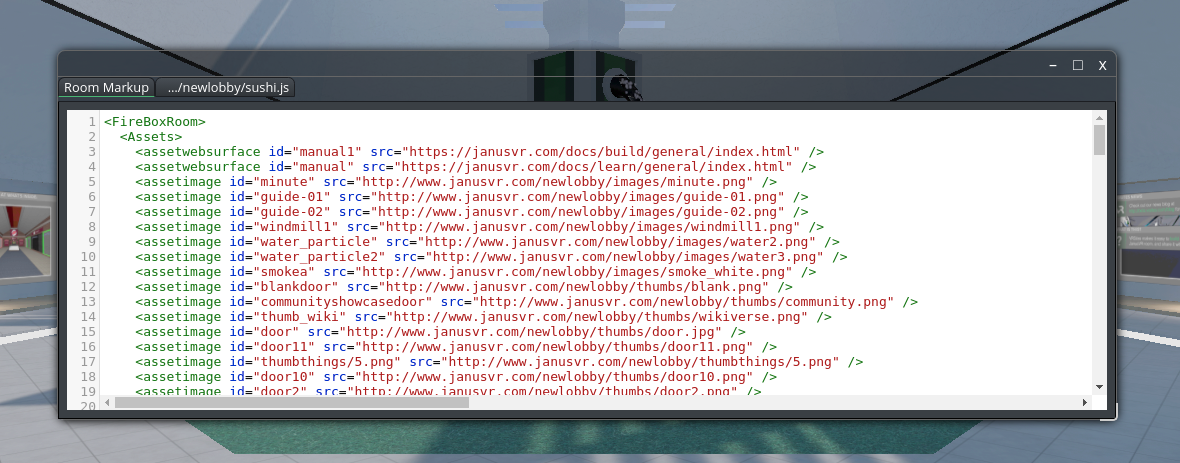

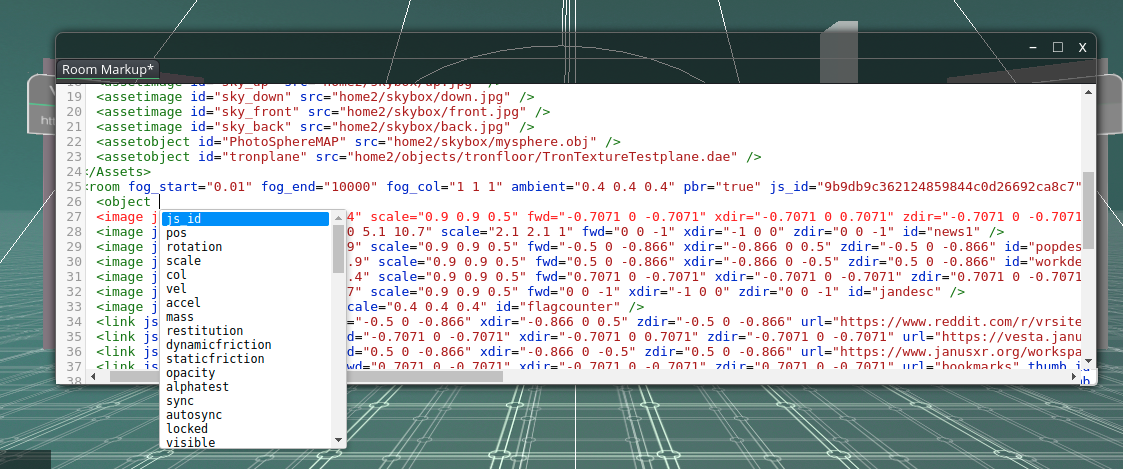
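Saving a picked asset "first into our VFS" could look roughly like the sketch below. The `PackAsset` shape, the `assets/` folder convention, and the injected `download` and `writeFile` callbacks are all hypothetical; injecting them keeps the logic independent of `fetch()` and of whichever VFS backend is mounted.

```typescript
// Hypothetical shape of an asset listed in a marketplace or asset pack.
interface PackAsset {
  name: string;
  src: string; // absolute URL on the pack's host
}

interface ImportPlan {
  sourceUrl: string; // where to download from
  vfsPath: string;   // where the copy will live in the VFS
  localSrc: string;  // janus-vfs:// URL the room should reference instead
}

// Decide where a pack asset should be stored inside the project's VFS folder.
function planImport(asset: PackAsset, projectDir: string): ImportPlan {
  const last = new URL(asset.src).pathname.split("/").pop();
  const fileName = last ? decodeURIComponent(last) : asset.name;
  const vfsPath = `${projectDir.replace(/\/+$/, "")}/assets/${fileName}`;
  return { sourceUrl: asset.src, vfsPath, localSrc: `janus-vfs://${vfsPath}` };
}

// Download the asset and write it into the VFS, returning the plan so the
// caller can rewrite the room's markup to point at localSrc.
async function importAsset(
  asset: PackAsset,
  projectDir: string,
  download: (url: string) => Promise<Uint8Array>,
  writeFile: (path: string, data: Uint8Array) => void,
): Promise<ImportPlan> {
  const plan = planImport(asset, projectDir);
  writeFile(plan.vfsPath, await download(plan.sourceUrl));
  return plan;
}

const crate: PackAsset = { name: "crate", src: "https://example.com/packs/scifi/models/crate.glb" };
console.log(planImport(crate, "/myproject/"));
```

A real implementation would also need to handle name collisions between packs (two packs both shipping `crate.glb`), for example by namespacing the destination folder by pack.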

Code Editor

I've started on major improvements to the code editor in JanusWeb. The existing code editor was painfully simple: just a plain <textarea> with some buttons to sync changes to and from the active room. The new code editor supports a tabbed interface for editing multiple HTML or JS files, as well as IntelliSense-style code autocompletion generated from the live codebase. In HTML mode, this gives us a list of which elements are available at any given point in our markup, and tells us which attributes they support. When working with an attribute that represents an asset, we can autocomplete values based on the assets available to the project. We can even autocomplete the <room require="..."> attribute based on the components available in the hosted code repository. It's a huge improvement, and it exposes a lot of functionality that was previously completely undocumented. I'm excited to see what others can do with it!

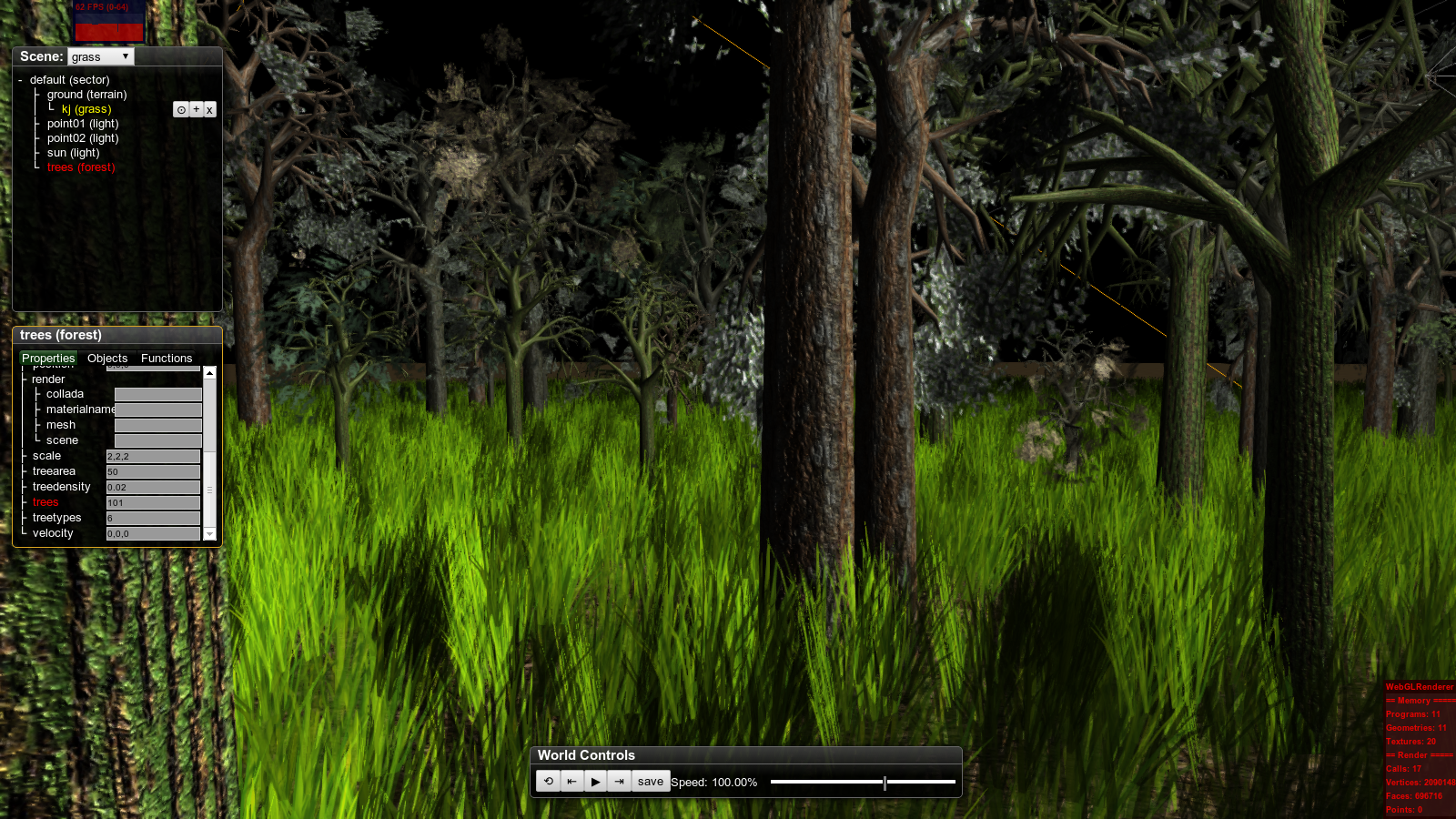

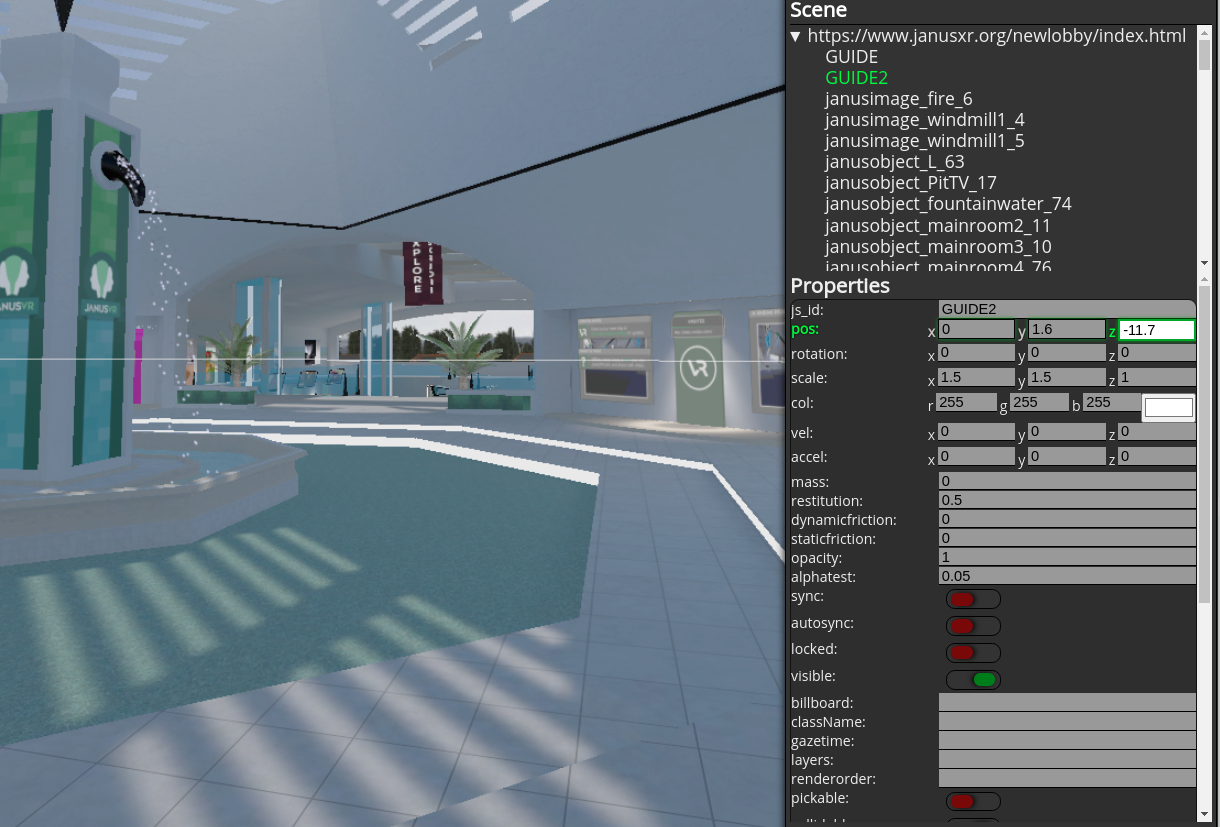
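The three kinds of completion described above (elements, attributes, and asset-typed values) can all be driven by one registry of element definitions. In this sketch the registry is written by hand and its contents are an illustrative subset, not JanusWeb's real schema; in the editor it would instead be generated by introspecting the engine's registered element classes.

```typescript
type AttrType = "string" | "float" | "vector3" | "color" | "boolean" | "asset";

interface ElementDef {
  attributes: { [name: string]: AttrType };
  children: string[]; // element names allowed inside this element
}

// Hypothetical, hand-written registry standing in for one generated from the
// live codebase.
const registry: { [name: string]: ElementDef } = {
  room: { attributes: { fog: "boolean", require: "string" }, children: ["object", "light", "sound"] },
  object: { attributes: { id: "asset", pos: "vector3", col: "color" }, children: ["object", "light"] },
  light: { attributes: { col: "color", light_range: "float" }, children: [] },
  sound: { attributes: { id: "asset", loop: "boolean" }, children: [] },
};

// Suggest child elements that are valid inside `parent` and match `prefix`.
function completeElement(parent: string, prefix: string): string[] {
  const def = registry[parent];
  return def ? def.children.filter((n) => n.startsWith(prefix)) : [];
}

// Suggest attribute names for an element, skipping ones already present.
function completeAttribute(element: string, prefix: string, present: string[] = []): string[] {
  const def = registry[element];
  if (!def) return [];
  return Object.keys(def.attributes).filter((a) => a.startsWith(prefix) && present.indexOf(a) === -1);
}

// For asset-typed attributes, suggest ids of assets available to the project.
function completeValue(element: string, attr: string, prefix: string, assetIds: string[]): string[] {
  const def = registry[element];
  if (!def || def.attributes[attr] !== "asset") return [];
  return assetIds.filter((id) => id.startsWith(prefix));
}

console.log(completeElement("room", "l"));
console.log(completeAttribute("object", "", ["pos"]));
console.log(completeValue("object", "id", "sh", ["ship", "shuttle", "crate"]));
```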

Scene Tree / Object Inspector / World Settings

The scene tree and object inspector go hand in hand. The scene tree lets you see at a glance how the objects in your scene are arranged (logically, not spatially). Selecting one of the objects loads it into the object inspector, allowing you to view and change the values of all of its properties. Both of these already work relatively well in the JanusWeb editor, but could do with some improvements.

Currently, all attributes are just listed in the order they're defined for each object. There's some basic logical grouping, but each object has dozens of properties, and it's sometimes hard to find the one you're looking for. A strict ECS gets natural grouping based on which component each attribute belongs to, but in our case it might make sense to add a "group" property to each attribute, which would let us organize related properties together. Alternatively, with some refactoring we could clean up our ECS implementation to work more like Unity and other component-based engines do.
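Here is a minimal sketch of how the proposed "group" property could drive the inspector layout. The `AttributeDef` shape and the group names are hypothetical; the point is that grouping is a single pass that preserves definition order within each group and collects ungrouped attributes under a fallback heading.

```typescript
interface AttributeDef {
  name: string;
  type: string;
  group?: string; // the proposed (hypothetical) "group" property
}

// Bucket attributes by group. Groups appear in the order they are first seen,
// and attributes keep their definition order within each group.
function groupAttributes(defs: AttributeDef[], fallback = "Other"): Map<string, AttributeDef[]> {
  const groups = new Map<string, AttributeDef[]>();
  for (const def of defs) {
    const key = def.group || fallback;
    const list = groups.get(key);
    if (list) list.push(def);
    else groups.set(key, [def]);
  }
  return groups;
}

const defs: AttributeDef[] = [
  { name: "pos", type: "vector3", group: "Transform" },
  { name: "col", type: "color", group: "Appearance" },
  { name: "rotation", type: "vector3", group: "Transform" },
  { name: "collision_id", type: "string" },
];

groupAttributes(defs).forEach((attrs, group) => {
  console.log(group, attrs.map((a) => a.name));
});
```

Each map entry would become a collapsible section in the inspector, which gives most of the benefit of component-based grouping without restructuring the object model.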

Our object inspector already understands the type of each attribute and offers different controls based on it: for example, color attributes get a color picker, vectors get three input boxes, and so on. There's room to improve how each of these input types works, for instance with better color and object pickers, improved vector and direction controls, etc.
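The type-to-control dispatch described above can be sketched as an exhaustive mapping. The type names and control descriptors here are hypothetical and toolkit-agnostic; the `never` check makes the TypeScript compiler flag any attribute type that is added without a matching control.

```typescript
type AttributeType = "color" | "vector3" | "float" | "boolean" | "string" | "object";

// Hypothetical descriptor for the control an inspector should render.
type Control =
  | { kind: "colorpicker" }
  | { kind: "numberinputs"; count: number }
  | { kind: "checkbox" }
  | { kind: "textinput" }
  | { kind: "objectpicker" };

function controlFor(type: AttributeType): Control {
  switch (type) {
    case "color": return { kind: "colorpicker" };
    case "vector3": return { kind: "numberinputs", count: 3 }; // one box per axis
    case "float": return { kind: "numberinputs", count: 1 };
    case "boolean": return { kind: "checkbox" };
    case "string": return { kind: "textinput" };
    case "object": return { kind: "objectpicker" };
    default: {
      // Compile-time exhaustiveness check: fails to type-check if a case is missing.
      const unreachable: never = type;
      throw new Error(`Unknown attribute type: ${unreachable}`);
    }
  }
}

console.log(controlFor("vector3"));
```

Improving one input type (say, a direction gizmo for vectors) then only means changing one case, without touching the inspector's layout code.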

Many engines have a separate tab for world settings, but in our case the world settings are just attributes on the room object, which can be inspected just like any other object. We can easily change settings like fog, skybox, bloom, tonemapping, gravity, and other world attributes by just selecting the room from the scene tree.

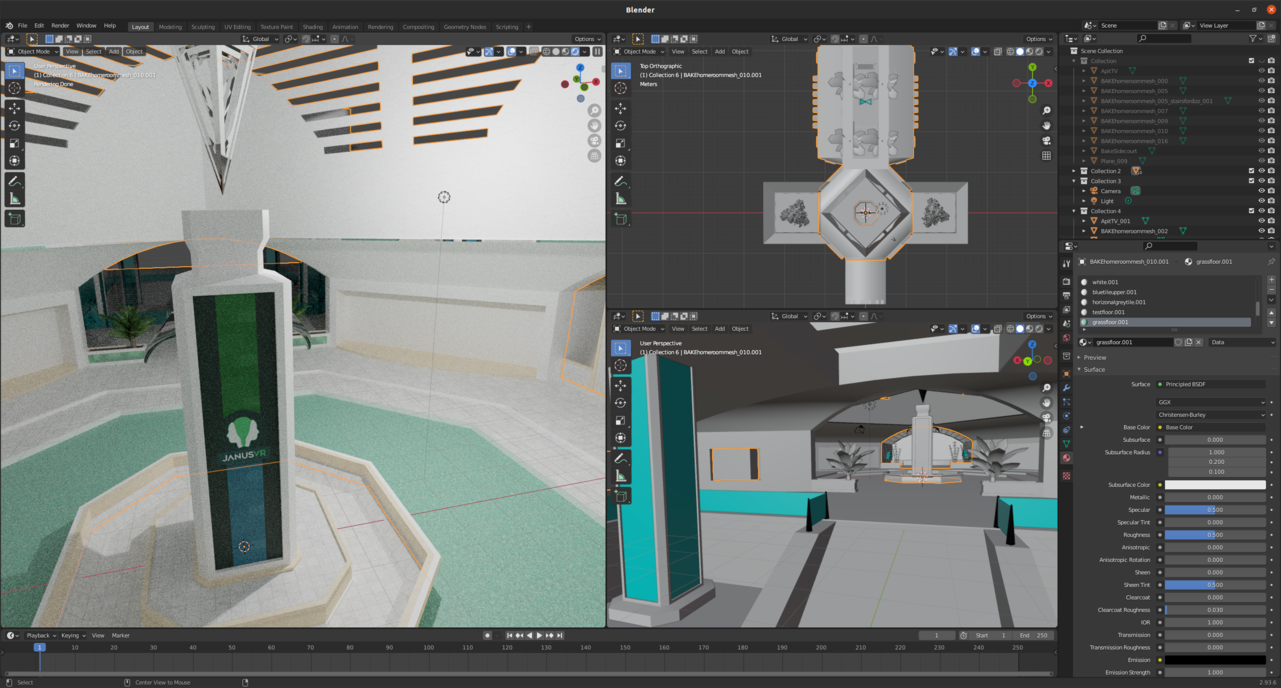

Multiple 3D Views

This is a feature I keep coming back to, but unfortunately WebGL makes it more difficult than it should be. Since its very first implementation over 10 years ago, Elation Engine has been built around the idea of "views": the renderer can have any number of views bound to the same scene, and each view can be bound to a different world entity. The original intent was to make something like a target or missile camera for a space sim: when targeting a ship you'd get a little zoomed-in view of it on your cockpit's VOD displays, and you could apply a different set of postprocessing filters, such as scanlines or glitch effects. This functionality still works perfectly, but secondary views can only be bound to framebuffers, not to separate HTML elements. So even though the view itself is an <elation-engine-systems-render-view> HTML custom element which can be positioned anywhere in our UI, only the "main" view can be bound to a <canvas> element, since we can't share the same WebGL context between canvases. In addition, each canvas only has a single "frontbuffer", meaning only your main framebuffer gets displayed. All other framebuffers can be used as textures within our canvas but can't escape its boundaries.

This ends up being limiting for some of the more advanced use cases we'd like to support here. I'd like to be able to split the main viewport into multiple views, with separate 3D preview and orthographic editor views. Each ortho editor view could be bound to an editor camera entity and moved independently within the same scene, much like any native 3D editor. This would also be useful for other UI elements within the engine: for example, an avatar selector in the settings window could use the existing in-memory avatar data, rather than having to create a new instance of the engine and set up a custom scene.

One workaround would be to extend the view system to use WebGL viewports (gl.viewport), so we could render each view into a quadrant of our canvas. This would work for the simple case of split viewports where all of the viewports are physically adjacent to each other, but it's a lot more limiting: for instance, if we want some UI surrounding each viewport, we have to leave gaps in our canvas. Another workaround would be to read back the pixel data from each view's framebuffer and copy it into a new canvas and WebGL context, but this would be extremely slow, since every frame would round-trip through the CPU. Ideally, short of full context sharing, we'd at least be able to reference our framebuffers as textures across contexts, similar to how modern OSes do zero-copy compositing, but I'm fairly confident that WebGL doesn't support this. C'est la vie.
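The viewport workaround mostly comes down to computing one rectangle per view. The sketch below splits a canvas into a grid; the function name and `Rect` type are hypothetical. The one WebGL-specific detail is that `gl.viewport` and `gl.scissor` measure `y` from the bottom-left corner of the canvas, so the top row on screen gets the largest `y`.

```typescript
interface Rect { x: number; y: number; width: number; height: number; }

// Split a canvas of the given pixel size into a cols x rows grid of viewports,
// ordered left-to-right, top-to-bottom as they appear on screen.
function gridViewports(canvasWidth: number, canvasHeight: number, cols = 2, rows = 2): Rect[] {
  const rects: Rect[] = [];
  for (let row = 0; row < rows; row++) {
    for (let col = 0; col < cols; col++) {
      // Integer edges so adjacent cells share borders with no gaps or overlap,
      // even when the canvas size isn't evenly divisible.
      const x0 = Math.floor((col * canvasWidth) / cols);
      const x1 = Math.floor(((col + 1) * canvasWidth) / cols);
      const yTop = Math.floor((row * canvasHeight) / rows);
      const yBottom = Math.floor(((row + 1) * canvasHeight) / rows);
      // Convert from top-left screen coordinates to WebGL's bottom-left origin.
      rects.push({ x: x0, y: canvasHeight - yBottom, width: x1 - x0, height: yBottom - yTop });
    }
  }
  return rects;
}

console.log(gridViewports(1920, 1080));
```

Each frame, the renderer would then loop over its views, call `gl.viewport(r.x, r.y, r.width, r.height)` and `gl.scissor(...)` with the same rectangle (with `gl.SCISSOR_TEST` enabled so clears stay inside the cell), and draw that view's camera. The limitation described above still applies: every cell lives inside the one canvas.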

Animation Sequencer

A recent addition to JanusWeb is the ability to (finally, reliably) access skeletal and morph target based animations. Now that we have this, it would be incredibly useful to have a built-in animation sequencer, where we could trigger sequences of animations based on player or other object state. mrdoob has created the Frame.js library which might be a useful basis for something like this, and it might also be a good candidate for a node-flow based UI.
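As a rough sketch of the sequencer idea (not based on Frame.js's API), a sequence can be a time-sorted list of steps that fire as elapsed time crosses them, with triggers that start a sequence when a condition on player or object state becomes true. The `Step`, `Trigger`, and clip names are hypothetical; a real version would call into the engine's animation mixer instead of returning clip names.

```typescript
interface Step {
  time: number; // seconds from the start of the sequence
  clip: string; // hypothetical animation clip name
}

// Plays a sorted list of steps; update() returns clips whose start time was crossed.
class Sequence {
  private elapsed = 0;
  private next = 0;
  private readonly steps: Step[];
  constructor(steps: Step[]) {
    this.steps = steps.slice().sort((a, b) => a.time - b.time);
  }
  get done(): boolean { return this.next >= this.steps.length; }
  update(dt: number): string[] {
    this.elapsed += dt;
    const fired: string[] = [];
    while (this.next < this.steps.length && this.steps[this.next].time <= this.elapsed) {
      fired.push(this.steps[this.next].clip);
      this.next++;
    }
    return fired;
  }
}

// A trigger starts its sequence when `when(state)` changes from false to true.
interface Trigger<S> { when: (state: S) => boolean; steps: Step[]; }

class Sequencer<S> {
  private active: Sequence[] = [];
  private wasTrue: boolean[];
  constructor(private readonly triggers: Trigger<S>[]) {
    this.wasTrue = triggers.map(() => false);
  }
  // Call once per frame with the current state and the frame's delta time.
  update(state: S, dt: number): string[] {
    this.triggers.forEach((t, i) => {
      const now = t.when(state);
      if (now && !this.wasTrue[i]) this.active.push(new Sequence(t.steps)); // rising edge
      this.wasTrue[i] = now;
    });
    const fired: string[] = [];
    for (const seq of this.active) fired.push(...seq.update(dt));
    this.active = this.active.filter((s) => !s.done);
    return fired;
  }
}

const greeter = new Sequencer<{ playerNear: boolean }>([
  { when: (s) => s.playerNear, steps: [{ time: 0, clip: "wave" }, { time: 1.5, clip: "bow" }] },
]);
console.log(greeter.update({ playerNear: true }, 0.5));
```

Firing on the rising edge keeps a sequence from restarting every frame while the condition stays true; it can play again once the condition resets.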

Material Editor / Shader Editor / VFX Editor / Scripting

All of these interfaces would also be great candidates for a node-flow based UI. Blender is an excellent example of how powerful a node-based material editor can be, and Three.js has a MeshNodeMaterial upon which this could be built. ShaderFrog is a great example of a capable in-browser node-based shader editor. Unreal and Unity both have very powerful VFX systems, also built around node-based UIs. Scripting is the most complex case here, and a hybrid solution is probably the best option. An implementation of the Blocks programming language might be a good start, but we'd definitely need the ability to extend the system with custom logic blocks if we want it to be powerful enough for advanced users. In the end, though, experienced programmers often break out of these systems and go back to coding in flat files, so whatever system we build should make it easy to switch back and forth between the two.
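Whatever the domain (materials, shaders, VFX, or logic), a node-flow UI sits on top of the same core: a graph whose nodes must be evaluated after their inputs. This sketch uses plain numbers and hypothetical node names to show depth-first evaluation with caching and cycle detection; a real material graph would carry vectors, textures, or GLSL snippets instead, but the ordering logic is the same.

```typescript
// An input is either a constant or a reference to another node's output.
type Input = number | { node: string };

interface GraphNode {
  op: (inputs: { [name: string]: number }) => number;
  inputs: { [name: string]: Input };
}

// Evaluate `target` by evaluating its dependencies first (depth-first
// topological order), caching each result and rejecting cycles.
function evaluate(graph: { [id: string]: GraphNode }, target: string): number {
  const cache = new Map<string, number>();
  const visiting = new Set<string>();

  function visit(id: string): number {
    const cached = cache.get(id);
    if (cached !== undefined) return cached;
    if (visiting.has(id)) throw new Error(`Cycle detected at node "${id}"`);
    const node = graph[id];
    if (!node) throw new Error(`Unknown node "${id}"`);
    visiting.add(id);
    const values: { [name: string]: number } = {};
    for (const name of Object.keys(node.inputs)) {
      const input = node.inputs[name];
      values[name] = typeof input === "number" ? input : visit(input.node);
    }
    visiting.delete(id);
    const result = node.op(values);
    cache.set(id, result);
    return result;
  }

  return visit(target);
}

// Hypothetical roughness graph: mix two sources by a constant factor.
const roughnessGraph: { [id: string]: GraphNode } = {
  base: { op: () => 0.25, inputs: {} },
  noise: { op: () => 0.75, inputs: {} },
  mix: {
    op: ({ a, b, t }) => a + (b - a) * t,
    inputs: { a: { node: "base" }, b: { node: "noise" }, t: 0.5 },
  },
};
console.log(evaluate(roughnessGraph, "mix"));
```

Because the graph is plain data, the same structure could be serialized back to a flat file and edited by hand, which is one way to support switching between visual and text editing.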